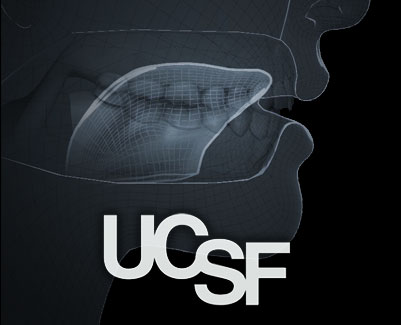

UCSF decodes neural activity to produce synthesized speech

We created a visualization of the muscle activations detected in the brains of test subjects. These activations were used to drive a speech synthesizer developed by the UCSF Department of Neurological Surgery.

Client

University of California San Francisco

Our Task

Our goal was to help UCSF show how the muscles of the vocal tract move in concert to produce speech, which in this case was a synthesized voice.

Production Stages

-

Process the audio

We received the audio from UCSF along with transcripts of the synthetic voice recordings.

-

Develop the look

Together with UCSF, we designed the look of the vocal tract. The goal was a visual style that matched the feel of the synthesized voice.

-

Animate the model

By processing the synthesized voice audio clips, we were able to automatically animate our chosen model of the vocal tract.

-

Rendering and compositing

The rendering was done in multiple passes, which were composited together to achieve the final “diagnostic” visual style we were aiming for.